For early internet users, the phrase “You’ve got mail” summons instant flashbacks to the era of screechy modems. But its legacy extends far beyond early net nostalgia — especially in the now-flourishing data center market.

In 1997, AOL built a data center atop a cheap plot of land near the corner of Pacific Boulevard and Waxpool Road, in Sterling, Virginia, to support AOL Mail. It was the first facility of its kind in the area. The site was mere miles from Dulles International Airport, which had drawn both power and fiber-optic cables to the area, and not far from MAE-East, one of the first major internet exchanges in the country. As AOL grew, the area continued to invest in IT infrastructure, which in turn attracted other data-center providers.

Nearly a quarter-century later, AOL has receded into the margins, but the spark it lit in Northern Virginia is now a data infrastructure hotspot. The stretch known as Data Center Alley is now home to the largest concentration of data centers in the world, with more than 160 centers spread across at least 18 million square feet. Locals are reportedly fond of saying that 70 percent of all internet traffic runs through the Alley, a refrain that speaks to its scope, even if the figure itself is apocryphal.

Growth at that epicenter is emblematic of a larger boom for the data center market. In North and South America, data center space grew more than 6 percent each year between 2010 and 2019. And although enterprise investment in data centers contracted due to the pandemic (a trend that is expected to reverse), companies like Google, Facebook and Amazon continue to invest in their hyperscale data centers at record levels. The number of hyperscalers worldwide is expected to soon surpass 700.

Why such growth? Consumption. IT and infrastructure efficiencies have tempered the pace, but generally speaking, as storage and compute usage rises, so too does infrastructure demand. That’s hardly surprising considering that data centers are the load-bearing beams that support just about everything we do online.

Data centers, for instance, power:

- Email and messaging.

- Artificial intelligence and machine learning.

- Software-as-a-service (SaaS) programs and desktop virtualization.

- Cloud storage and computing services, including databases and infrastructure.

- E-commerce transactions.

That said, if you never thought about where “the cloud” is, you’re hardly alone.

“There’s certainly a disassociation between the [digital] products that people use on a daily basis and how that’s essentially generated and delivered — probably more than almost any other product except for maybe electricity,” said Tim Hughes, director of strategy and development at data center provider Stack Infrastructure, and a former data-center site selection manager at Facebook.

Stack is a newcomer in the market, but even it bears the region’s history. The old AOL Mail data center was purchased in 2016 by Infomart, whose assets were acquired and relaunched as Stack in January of 2019.

Types of Data Centers

Whether or not we connect the dots, data centers remain the backbone of our increasingly online lives. And here, too, the tech giants dominate. Over the last two years, “the biggest growth in the U.S. hyperscale data center footprint has come from Amazon, Facebook and Google,” John Dinsdale, chief analyst and research director at Synergy Research Group, told Bisnow in November. “Together those three have accounted for over 80 percent of new hyperscale data centers that have been opened in the U.S.”

But the big names don’t always go it alone. Companies like Stack Infrastructure and Vantage Data Centers are part of a small-but-important ecosystem of companies that partner with major cloud providers to assist with builds and maintain the physical premises, if not the IT. They also build colocation data centers where enterprises that can manage (or contract out) their own server upkeep can rent rack space for their data storage. Both colocation and cloud facilities operate at the hyperscale level.

Think of it as a landlord-tenant relationship, in which the tenant is responsible for everything on the server, and the landlord owns a sprawling site full of mechanical galleries, security measures, power generators and environmental controls — because running tens of thousands of servers under one roof generates a lot of heat that needs to go somewhere, it turns out.

Both Vantage and Stack declined to name the tech firms with whom they collaborate, but said partners include some of the largest tech companies out there.

“If you know who the hyperscalers are, then you know who’s probably a good candidate to lease our kind of space,” said Lee Kestler, chief commercial officer of Vantage and a longtime leader in the Northern Virginia market.

There are also so-called managed services data centers. They’re similar to cloud data centers, but allow clients to drill deeper into the stack. Cloud users rent instances, or virtual machines, to support resources like SaaS programs, memory, and database and messaging services. Managed services do the same but also give users access to the physical servers.

“Whereas with AWS, I just get access to the operating system, with a managed service provider, I actually get access to the hardware,” said Dan Thompson, a principal research analyst covering data centers at 451 Research.

Why take that extra step? Some think it’s cheaper in the long run to buy servers and hand them off to a third party for maintenance, rather than pay for cloud instances. And there are also a handful of applications that use hardware security keys at the server level, so physical access becomes a must.
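The “cheaper in the long run” argument boils down to a break-even calculation. Here is a minimal sketch. The function name and every price in it are hypothetical, and it ignores financing, depreciation, power and hardware refresh cycles:

```python
import math

def break_even_months(upfront_hardware: float, managed_fee_per_month: float,
                      cloud_cost_per_month: float) -> float:
    """Months after which buying servers (plus a monthly managed-service fee)
    becomes cheaper than renting equivalent cloud instances.
    Returns math.inf if renting is never more expensive per month."""
    monthly_savings = cloud_cost_per_month - managed_fee_per_month
    if monthly_savings <= 0:
        return math.inf  # owning never pays back under these assumptions
    return upfront_hardware / monthly_savings

# Hypothetical figures for illustration only; not taken from the article.
months = break_even_months(upfront_hardware=12_000,
                           managed_fee_per_month=300,
                           cloud_cost_per_month=800)
print(f"Owning breaks even after {months:.0f} months")
```

Past the break-even point each month favors owning, which is why the answer is so sensitive to how long a company expects to keep the hardware.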

But more often, according to Thompson, it boils down to what he calls “server hugging” — the perception of added security afforded by not sharing infrastructure. “I just want to know that I have a physical box dedicated to me,” he said, explaining the rationale.

When looking across the data center landscape, it’s worth remembering that these different types of centers are hardly siloed. Workloads today are more fluid than ever.

For example, nearly half of companies in a recent 451 Research survey said they had migrated an application or workload off the cloud within the previous year. (Companies didn’t specify whether those repatriations were permanent, so it shouldn’t be assumed that what moves from the cloud stays off the cloud.) Reasons cited for shifting tasks included information security concerns, shifting from testing environments to production, and data sovereignty issues.

Companies are also still tackling application modernization initiatives, moving legacy apps to newer, containerized architectures, and that is a factor too, said George Burns, a senior consultant of cloud operations at SPR. Migrating data and computing tasks between environments “has become increasingly more necessary” as companies make these pushes, he said.

Cost also plays a role. The news this month, reported by The Information, that Cisco Systems is looking to capitalize on frustration over high AWS bills by making it easier to move between cloud data centers and private ones, only underscores the entangled nature of data centers.

“If we look at the world today, it’s a very hybrid world,” said Thompson.


What Goes on Inside a Modern Data Center?

Take a 20-minute walk south down Pacific from the historic AOL-turned-Stack facility and you’ll arrive at VA11, a 250,000-square-foot Vantage data center that is, in some ways, representative of broader data center approaches and, in others, more distinctive.

Built in 2019, VA11 is the first of five planned data centers on what will be a $1 billion, 142MW campus stretched across 42 acres. It’s indicative of a long-term shift away from smaller data centers toward densely built hyperscale designs, which has led to better energy efficiency than in the old days.

Flooring layout is a big consideration in data centers. VA11 incorporates the two most common design options in different parts of the facility: raised floors and concrete floors. Raised floors, which hide cabling below your feet, are hardly unique to data centers. (Your office building may have them.) But the gaps between your feet and the real floor are deeper in data centers, with around two feet of airspace to help cooling. This flooring tends to be used with lower-power servers, which require less sophisticated airflow.

Even though the industry is, by and large, moving toward concrete slabs, water piping considerations (and a strong dislike of visible cable clutter) may lead some center designers to opt for some raised flooring.

Concrete floors are more efficient and advantageous, Kestler said. They allow you to bring in heavier servers, and all the wiring for both network and power lives above the racks, which simplifies access. (At VA11, that cabling is sheltered overhead by a Gordon ceiling grid, the assembly video for which is a fun watch for engineering nerds.)

Hughes noted that Stack favors concrete, but agreed that the call boils down to situational drivers. “It’s really a combination of the client’s preferences combined with the capabilities of the facility,” he said.

As you might expect, security is an important detail too. At VA11, that includes perimeter fencing, CCTV monitoring and 24/7 security patrols. Every door is alarmed and uses PINs, badges and biometrics for entry. Inside the infrastructure rooms, high-sensitivity smoke detectors “detect the smallest amount of smoke,” said Steve Conner, vice president of solutions engineering and sales at Vantage, in a walkthrough video, which also peeks into the mechanical gallery, generator yard, (perpetually, audibly humming) electrical room and other spots in the building.

Even though servers can motor along at surprisingly high temperatures, guidelines exist to make sure they run in an optimal range. To keep things cool, both Vantage Data Centers and Stack Infrastructure use what are called air-cooled chillers, which sit on the rooftop and send cooling fluid through a closed-loop system into the data rooms, while devices called Computer Room Air Handling (CRAH) units draw out heat.

In so-called hot aisles, where the backs of server racks face each other, temperatures can run as high as 100 degrees Fahrenheit. “That heat gets evacuated up into a plenum, taken back to the air conditioning units, recycled and pushed back through — and the cold air comes out around 72 [degrees],” Kestler said.
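Kestler’s two temperatures make a rough airflow estimate possible. This sketch uses the common HVAC sensible-heat rule of thumb for air near standard conditions, and it assumes a hypothetical 10 kW rack. The article does not say how Vantage actually sizes its CRAH units.

```python
# Sensible-heat rule of thumb (air near standard conditions):
#   BTU/hr = 1.08 * CFM * delta_T_F
# 1.08 ~= 60 min/hr * 0.075 lb/ft^3 (air density) * 0.24 BTU/(lb*F) (specific heat).
WATTS_TO_BTU_PER_HR = 3.412

def required_airflow_cfm(it_load_watts: float, hot_aisle_f: float,
                         cold_aisle_f: float) -> float:
    """Approximate airflow (cubic feet per minute) needed to carry away
    it_load_watts of heat as air warms from cold_aisle_f to hot_aisle_f."""
    delta_t = hot_aisle_f - cold_aisle_f
    if delta_t <= 0:
        raise ValueError("hot aisle must be warmer than the supply air")
    return it_load_watts * WATTS_TO_BTU_PER_HR / (1.08 * delta_t)

# Hypothetical 10 kW rack, with ~72 F supply air and a ~100 F hot aisle.
cfm = required_airflow_cfm(10_000, hot_aisle_f=100, cold_aisle_f=72)
print(f"{cfm:.0f} CFM")
```

Airflow scales inversely with the temperature rise, so letting the hot aisle run hotter means less air has to be moved for the same heat load.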

Data Center Standards and Tiers

All these environmental and industrial design considerations exist to maximize operational efficiency, but what about the electrical layout? After all, few details are more important than the ability to keep the power on.

VA11 is a Tier III-quality data center under the Uptime Institute standard, the most widely used system for classifying power resiliency in the industry. (The Telecommunications Industry Association’s standard is another option.) The Uptime standard is a four-tier ranking: the higher the tier, the greater the redundancy.

Despite the fact that Tier IV data centers have the lowest expected downtime (though the Uptime Institute doesn’t specify an amount in its tier definitions), the cost that’s passed down to users isn’t widely believed to be worth the downtime savings. That’s why most data centers fall into the Tier III category.

Once a data center builder establishes a track record and becomes a trusted entity, the certification itself is essentially considered superfluous within the industry, particularly within the United States. (Certifications, even from established colocation builders, are considered more important outside the United States, in emerging markets, where clients may be less familiar with the providers.) There’s even less need to go through the process for tech firms that build data centers for their own operations, as opposed to colocation providers, which rent out space.

“If I’m Facebook, building my own data center, I know what the heck I’m doing. I don’t need you to come in and verify I did what I say I did,” said Thompson.

Just How Much Energy Do Data Centers Really Use?

With data consumption forever climbing, it’s reasonable to expect that data center energy usage has skyrocketed in kind. But that’s not necessarily true.

Research published earlier this year in Science magazine showed that energy-use growth in data centers slowed between 2010 and 2018, thanks to efficiency improvements in technology and infrastructure.

The growth in service demand was significant. More than a million servers were added to the global installed base, data center workloads and compute instances grew roughly 6.5-fold, installed storage capacity grew about 26-fold and data center IP traffic grew about 11-fold, according to the report.

Yet at the same time, energy-usage growth was essentially flat — and, in fact, trended slightly downward.

Average power usage effectiveness (PUE), the industry-standard measurement of energy efficiency, fell to roughly 0.75 times its 2010 level. (PUE represents a facility’s total energy divided by IT equipment energy. The lower the number, the better, with 1.0 being the theoretical ideal.) Server watt-hours per computation fell to about 0.24 times their 2010 level, and the average number of servers per workload fell to about 0.19 times.
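Because PUE is a simple ratio, it is easy to compute. Here is a minimal sketch. The function names and the annual energy figures for the two sites are hypothetical.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy divided by IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh

def overhead_share(pue_value: float) -> float:
    """Fraction of total facility energy that goes to non-IT overhead
    (cooling, power conversion, lighting and so on)."""
    return 1 - 1 / pue_value

# Hypothetical annual figures for two sites with the same 1,000 MWh IT load.
older_site = pue(total_facility_kwh=2_000_000, it_equipment_kwh=1_000_000)
newer_site = pue(total_facility_kwh=1_200_000, it_equipment_kwh=1_000_000)
for name, value in (("older", older_site), ("newer", newer_site)):
    print(f"{name}: PUE {value:.2f}, overhead {overhead_share(value):.0%} of total energy")
```

Converting PUE into an overhead share makes the gap easier to picture. At 2.0, half of every kilowatt-hour entering the site never reaches IT equipment. At 1.2, about one-sixth doesn’t.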

How was it possible? According to the research, gains in processor power and reductions in idle power usage drove the electricity-use-per-computation drop, while improvements in storage-drive density pushed the drop in storage-related energy usage. Machine virtualization and the ongoing trend toward hyperscale design were also factors.

The “simplistic,” “doomsday” projections that had portended catastrophic rises in energy consumption failed to take into account “the technology progress that occurred in parallel,” lead study author Eric Masanet told Built In.

Despite the unexpectedly reassuring assessment, the researchers simultaneously urged the industry and policymakers to reject complacency. “Diligent efforts will be required to manage possibly sharp energy demand growth once the existing efficiency resource is fully tapped,” they wrote, warning that data-center compute instances worldwide could double within just three years.
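Doubling in three years implies a steep compound rate. Plain compound-growth arithmetic puts it at roughly 26 percent a year. This is our own check, not a figure from the study.

```python
def implied_annual_growth(multiple: float, years: float) -> float:
    """Constant annual growth rate that turns 1 into `multiple` over `years` years."""
    return multiple ** (1 / years) - 1

rate = implied_annual_growth(multiple=2, years=3)
print(f"{rate:.1%} per year")  # 26.0% per year
```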


The report concludes with three suggestions to make sure efficiency keeps pace with growing demand: 1) Policymakers should further strengthen and promote efficiency standards for servers, storage and network devices. 2) They should also offer incentives to accelerate adoption, and further fund emerging tech that might bolster efficiency (such as ultrahigh-density storage and quantum computing). 3) The industry should keep improving how it publicizes and shares energy data, and strengthen its modeling capabilities.

So has the needle moved at all since publication? Server efficiency standards are still progressing well, and there’s also been promising movement in China, Masanet said. In 2016, Beijing banned data centers that have a PUE of 1.5 or higher, and Shanghai recently issued a plan to cap data center PUE in the city at 1.3. At the same time, we still lack a strong grasp of the energy intensity of artificial intelligence, and the extent to which new edge-computing facilities will prize efficiency remains to be seen, he added.

The study also raises a question common to broader climate concerns: Just how much can we count on innovating our way out of the issue? Even though Moore’s law is indeed slowing down, that doesn’t mean there isn’t room for more technical improvement, Masanet said.

“What really matters is Landauer’s limit, which is the amount of energy it takes to do a binary switch of a transistor, and we’re still thousands of times less efficient than the limit implies,” he noted. “So there’s still a lot of technical room — whether it can be done with the materials we have or our ability to design chips in a more efficient way remains to be seen.”
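Landauer’s principle is usually stated as the minimum energy needed to erase one bit of information: k_B × T × ln 2, where k_B is Boltzmann’s constant and T is absolute temperature. Here is a small sketch of that formula at roughly room temperature (300 K, an assumed value):

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact SI value since the 2019 redefinition

def landauer_limit_joules(temperature_k: float) -> float:
    """Minimum energy to erase one bit at the given temperature: k_B * T * ln 2."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)

e_bit = landauer_limit_joules(300)
print(f"{e_bit:.3e} J per bit")  # about 2.871e-21 J
```

That is a few zeptojoules per bit. The exact gap between today’s chips and this bound depends on how switching energy is measured, so the figure is best read as a floor rather than a target.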

There’s also “a huge amount” of low-hanging-fruit energy waste that could be targeted, like zombie servers. “A lot of data centers still run servers very inefficiently — they could virtualize, consolidate and just have far fewer servers running for the same amount of jobs they’re meeting today,” Masanet said.
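At its core, consolidation of this kind is a bin-packing problem. The sketch below uses first-fit decreasing, a standard heuristic that we chose ourselves; Masanet does not describe it. The utilization figures are hypothetical, expressed as a percent of one server’s capacity. Real consolidation also has to weigh memory, I/O, failure domains and peak rather than average load.

```python
def consolidate(loads_pct: list[int], capacity_pct: int = 80) -> list[list[int]]:
    """Pack workloads onto hosts using the first-fit decreasing heuristic.

    Each workload is its average utilization as a percent of one server;
    capacity_pct caps how full any host may get (headroom for peaks)."""
    hosts: list[list[int]] = []   # workloads assigned to each host
    used: list[int] = []          # total utilization per host
    for load in sorted(loads_pct, reverse=True):   # largest workloads first
        if load > capacity_pct:
            raise ValueError(f"workload at {load}% cannot fit on any host")
        for i, total in enumerate(used):
            if total + load <= capacity_pct:       # first host with room
                hosts[i].append(load)
                used[i] += load
                break
        else:                                      # no host had room: add one
            hosts.append([load])
            used.append(load)
    return hosts

# Hypothetical fleet: ten physical servers, each running one lightly used workload.
fleet = [15, 10, 30, 5, 20, 25, 10, 5, 35, 15]
packed = consolidate(fleet, capacity_pct=80)
print(f"{len(fleet)} servers -> {len(packed)} servers: {packed}")
```

First-fit decreasing isn’t guaranteed to find the fewest hosts, but it is simple and usually close.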


Power Usage Effectiveness Isn’t Everything

Masanet’s study takes into account far more than PUE, and that breadth matters. The metric has become the de facto benchmark for energy efficiency in data centers, but it has also been criticized for its narrow scope, having been described as “rusty” and (less charitably) “atrocious.”

Andrew Lawrence, a founding member of the Uptime Institute, an industry standard-setter, cautioned in November that PUE falls short in three key areas. First, the industry-wide trend toward greater site resiliency inevitably leads to higher PUE. Second, the metric says nothing about water use, a notable gap now that the big tech firms have moved toward evaporative cooling, which requires more water than other environmental-control techniques. Third, and perhaps most importantly, it offers no measure of IT computation efficiency.

“Energy-saving efficiency investments on the IT side, however, are not rewarded with a lower PUE, but rather a higher one (as PUE is the ratio of the IT to non-IT energy figures),” wrote Lawrence.
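Lawrence’s point follows from the arithmetic. PUE is total facility energy divided by IT energy, which equals 1 + overhead/IT. If servers become more efficient while cooling and power-distribution overhead stays roughly fixed, PUE rises even though the site uses less energy overall. That fixed-overhead assumption is a simplification, and the numbers below are hypothetical:

```python
def pue_from_parts(it_kwh: float, overhead_kwh: float) -> float:
    """PUE = total facility energy / IT energy = (IT + overhead) / IT."""
    return (it_kwh + overhead_kwh) / it_kwh

# Hypothetical facility; overhead is assumed NOT to shrink when IT load drops.
overhead = 400_000.0
before = pue_from_parts(it_kwh=1_000_000.0, overhead_kwh=overhead)
after = pue_from_parts(it_kwh=800_000.0, overhead_kwh=overhead)  # 20% IT-side saving
print(f"PUE {before:.2f} -> {after:.2f}; total energy "
      f"{1_000_000 + overhead:,.0f} -> {800_000 + overhead:,.0f} kWh")
```

Total consumption falls by 200,000 kWh, yet the headline metric gets worse.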

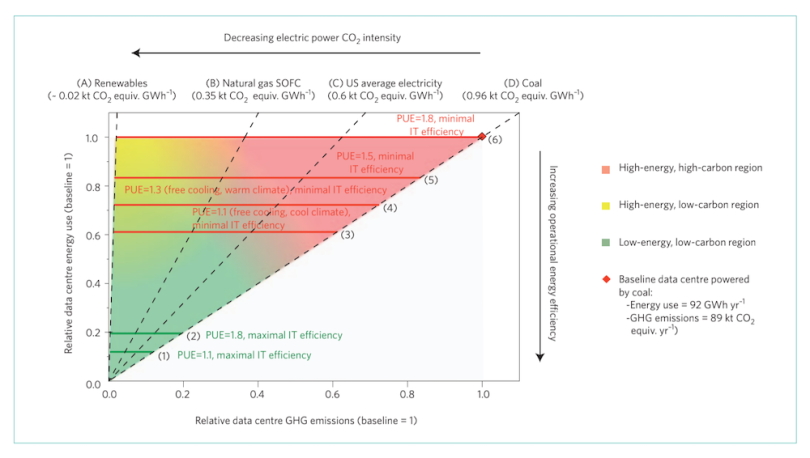

Along with IT efficiency, factors like local climate and power sourcing can complicate how the metric should be read. A visualization from 2013 research led by Masanet shows that, under certain conditions, a data center with a higher PUE (1.8) can actually use less energy and emit less carbon than a center with a better PUE (1.1).
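The same effect can be illustrated in spirit with a few lines of arithmetic. Every number below is hypothetical, not from the 2013 study. Facility A is assumed to do the same work as facility B on servers that draw half as much energy, and to buy cleaner power.

```python
from dataclasses import dataclass

@dataclass
class Facility:
    name: str
    it_mwh: float               # annual IT equipment energy (hypothetical)
    pue: float                  # total facility energy / IT energy
    grid_kg_co2_per_mwh: float  # carbon intensity of the electricity supply (hypothetical)

    def total_mwh(self) -> float:
        return self.it_mwh * self.pue

    def tonnes_co2(self) -> float:
        return self.total_mwh() * self.grid_kg_co2_per_mwh / 1000

# Both facilities are assumed to deliver the same computing work.
a = Facility("A", it_mwh=5_000, pue=1.8, grid_kg_co2_per_mwh=100)
b = Facility("B", it_mwh=10_000, pue=1.1, grid_kg_co2_per_mwh=700)
for f in (a, b):
    print(f"{f.name}: PUE {f.pue}, {f.total_mwh():,.0f} MWh, {f.tonnes_co2():,.0f} t CO2")
```

PUE looks only at the ratio between overhead and IT energy. It cannot see how much IT energy the work required, or how dirty the electricity was.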

Most of the industry members and watchers with whom Built In spoke acknowledged the limitations of the metric while also noting that it has helped drive infrastructure improvements.

“It was a good start … but we need to continue progress,” Masanet said.

A metric called water usage effectiveness (WUE) was put forth in 2011 as a way to measure how much water data centers use. But even as the largest players move toward water-reliant evaporative cooling, WUE numbers are often hard to come by.

“You can go through Data Center Alley and see the big plumes of moisture that look like fog or clouds on a cold morning,” Kestler said. “Those are evaporative-chilled water plants releasing moisture in the air. Those data centers, while they’re highly efficient, use hundreds of thousands of gallons of water, potable or non-potable, a day.”
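WUE, as defined by The Green Grid, divides a site’s water use in liters by its IT equipment energy in kilowatt-hours, and it is normally reported over a full year. To express Kestler’s “hundreds of thousands of gallons” in those units, here is a sketch using two assumptions: a hypothetical 300,000 gallons a day and a hypothetical steady 50 MW IT load.

```python
LITERS_PER_US_GALLON = 3.785411784

def wue_l_per_kwh(site_water_liters: float, it_energy_kwh: float) -> float:
    """Water usage effectiveness: site water use (liters) / IT equipment energy (kWh)."""
    return site_water_liters / it_energy_kwh

# Hypothetical site: 300,000 gallons of water a day against a steady 50 MW IT load.
water_l = 300_000 * LITERS_PER_US_GALLON
it_kwh = 50_000 * 24  # 50 MW = 50,000 kW, running for 24 hours
print(f"WUE ~ {wue_l_per_kwh(water_l, it_kwh):.2f} L/kWh")
```

With a steady load, one day’s ratio matches the annual figure. Real sites see seasonal swings, which is one reason annual reporting is the norm.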


It should be noted that many of the tech-giant data center providers are considered to have taken their environmental footprint quite seriously. Google and Microsoft have netted good marks, as has Facebook. The majority have “worked tirelessly to really reduce overhead energy use,” Masanet said.

But Facebook — which has stressed its substantial water mitigation efforts — appears to be the only big-tech data-center player that consistently reports WUE. Meanwhile, water usage numbers at Google are fiercely guarded.

Location, Location, Location

“In the Pacific Northwest” — which is one of the fast-emerging data-center markets in the country — “you could probably use [more] water, because there’s plenty of it,” Kestler told Built In.

Of course, latency expectations won’t permit data centers to be built only where it’s wet. So what factors drive site selection?

The two biggest drivers, according to Hughes, are safety and proximity to network exchange. For safety, low risk of natural disaster is important, but the region needs to be stable socially and politically too. “We take a lot of that for granted in the U.S., but the internet is a global thing,” he said. “There are places where you have to take those things into consideration.”

Even more important than things like construction costs or tax breaks is network reach. “Everything [else] could be great, but if you’re in the middle of a place that has no interconnectivity … then you’re just a box with a bunch of servers creating heat,” Hughes said.

Other differentiators include labor costs, tax incentives, access to talent and renewable energy, and cost of energy. “Certain parts of the country are a lot cheaper to buy a kilowatt hour of energy than others,” he added.
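Hughes treats interconnectivity as close to a prerequisite and energy price as a differentiator. That ordering can be sketched as a filter followed by a ranking. The site names, prices, 20 MW load and PUE of 1.3 are all hypothetical. A real selection would also weigh safety, tax incentives, labor and talent.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8_760

@dataclass
class Site:
    name: str
    has_interconnect: bool  # hypothetical flag: reach to network exchanges and carriers
    usd_per_kwh: float      # hypothetical delivered electricity price

def annual_energy_cost(site: Site, it_mw: float, pue: float) -> float:
    """Yearly electricity bill for a constant IT load, including facility overhead."""
    return it_mw * 1_000 * pue * HOURS_PER_YEAR * site.usd_per_kwh

candidates = [
    Site("cheap-but-isolated", has_interconnect=False, usd_per_kwh=0.04),
    Site("well-connected-a", has_interconnect=True, usd_per_kwh=0.06),
    Site("well-connected-b", has_interconnect=True, usd_per_kwh=0.09),
]
# Network reach acts as a hard gate; energy price only ranks the sites that pass it.
viable = [s for s in candidates if s.has_interconnect]
ranked = sorted(viable, key=lambda s: annual_energy_cost(s, it_mw=20, pue=1.3))
for s in ranked:
    print(f"{s.name}: ${annual_energy_cost(s, it_mw=20, pue=1.3):,.0f} per year")
```

The cheapest power in the list never makes the ranking, which is Hughes’s “box with a bunch of servers” problem expressed as a filter.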


The actual process of securing a site can be a delicate, somewhat clandestine, dance.

Jennifer Reininger is a senior consultant at Environmental Systems Design, a firm that works with mission-critical industries on engineering and site selection. Its clients, she said, include the majority of hyperscale data-center providers.

Reininger told Built In that, even while a company might be negotiating with a municipality on things like tax incentives on one hand, a representative might also, say, only use their first name, or send emails from a non-work address during initial outreach, in efforts to avoid a bidding war or community backlash. At the beginning, “you definitely want to keep things more under hat,” she said.


It All Comes Back to Energy

In recent years, many data-center leaders have taken their site-selection research and development into novel waters. In one case, that meant literally dumping a data center into the ocean: Microsoft recently resurfaced a sealed, shipping-container-sized data center after dropping it to the seafloor off the coast of Scotland more than two years ago.

Elsewhere, Google has built and expanded a data center inside a repurposed Finnish paper mill. Facebook set up a data center in the forests of northern Sweden, fewer than 70 miles from the Arctic Circle. And a Norwegian provider has facilities underground and in caves.

These projects have an irresistible air of whimsy, but — as attempts to harness the natural cooling elements of their environments — they’re all driven by the energy concerns described above. How to scale up sustainably remains the unavoidable challenge.

It’s a nuanced conversation, especially when we consider our own role as users. There’s no reason, for instance, to feel guilty about streaming far more movies and games while we socially distance, especially as travel-related emissions have plummeted.

But resisting a collective disconnect from what actually fuels our digital habits (the kind we often have with food sourcing and electrical power) is probably healthy too, with the important caveat that such an understanding is by no means an invitation for industry or policymakers to shirk their responsibility to keep innovating on efficiency.

“Data centers are driving energy consumption, but the underlying value proposition of the internet is driving data center growth … and it’s being done in the most efficient way possible,” said Hughes, who, prior to joining Stack, worked on Facebook’s ambitious, heat-repurposing Odense data center.

“So what do we want? Do we want the internet or do we not want the internet? At some point that’s fundamentally what the actual question is.”